The healthcare industry stands on the brink of a data revolution, where artificial intelligence promises to transform diagnostics, treatment planning, and patient outcomes. Yet this potential remains largely untapped due to one persistent barrier: patient privacy. Traditional approaches to developing medical AI require centralizing sensitive health data, creating unacceptable risks and regulatory challenges. Now, an emerging paradigm called federated learning is rewriting the rules of medical AI collaboration while keeping data safely behind institutional firewalls.

Breaking Down Data Silos Without Sharing Data

Federated learning represents a fundamental shift in how machine learning models get trained across multiple healthcare organizations. Unlike conventional methods that pool datasets into a central repository, this distributed approach allows hospitals, research institutions, and pharmaceutical companies to collaboratively improve models without ever exchanging raw patient data. "It's like having a hundred chefs perfect the same recipe, each working in their own kitchen with different ingredients, but never revealing their secret formulas," explains Dr. Elena Rodriguez, a biomedical informatics researcher at Massachusetts General Hospital.

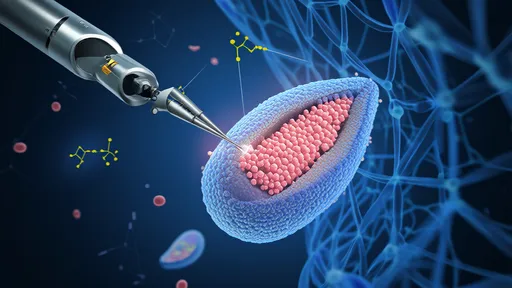

The technical process works through an iterative exchange between participating institutions and a coordinating server. In each round, every institution trains the current model locally on its own patient data, then sends only the resulting model updates (never the underlying data) to the server. The server aggregates these updates into a refined global model and sends it back to all participants, and the model improves progressively over many rounds of this privacy-preserving collaboration.
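To make the round-trip concrete, here is a minimal sketch of one common aggregation scheme, federated averaging, in which the server takes a weighted average of each site's locally trained parameters. Everything in it is illustrative rather than drawn from any of the projects described here: the function names (`local_update`, `federated_average`, `run_rounds`), the toy linear model, and the synthetic "hospital" datasets are all assumptions, and a real deployment would use a proper framework and far larger models.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Train on one site's private data; only the new weights leave the site."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradient of mean squared error for the toy model y = w * x + b.
        grad_w = sum((w * x + b - y) * x for x, y in data) / n
        grad_b = sum((w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Server side: average site weights, weighted by local dataset size."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def run_rounds(site_datasets, rounds=100):
    """Repeat: broadcast global weights, train locally, aggregate."""
    global_weights = (0.0, 0.0)
    sizes = [len(d) for d in site_datasets]
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in site_datasets]
        global_weights = federated_average(updates, sizes)
    return global_weights


def make_site(n, seed):
    """Synthetic stand-in for one institution's data (true w=2, b=1)."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0, 0.01)) for x in xs]


# Three hypothetical hospitals with different amounts of data.
sites = [make_site(40, 1), make_site(60, 2), make_site(100, 3)]
w, b = run_rounds(sites)
print(f"global model: w={w:.3f}, b={b:.3f}")
```

Note that the server function only ever sees `(w, b)` pairs and dataset sizes, never the `(x, y)` records themselves, which is the property the approach depends on.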

Real-World Applications Showing Promise

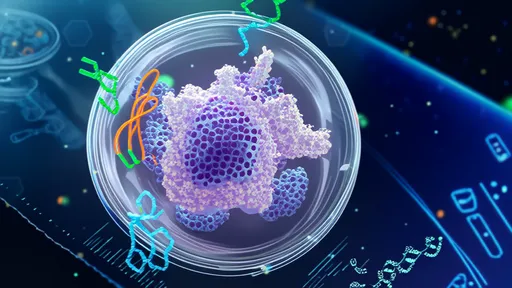

Early implementations demonstrate federated learning's potential across various medical specialties. A consortium of European hospitals recently completed a federated study to improve brain tumor segmentation models, achieving diagnostic accuracy comparable to models trained on centralized data while maintaining strict patient confidentiality. In the United States, the National Institutes of Health has funded several pilot projects applying federated approaches to rare disease research, where data scarcity has traditionally hampered progress.

Perhaps most compelling are the COVID-19 related applications that emerged during the pandemic. With urgent need for predictive models but no time for lengthy data-sharing agreements, federated networks allowed hospitals worldwide to collaboratively develop algorithms for predicting patient deterioration, ICU needs, and long-term complications. "The pandemic forced us to innovate at unprecedented speed," notes Dr. Raj Patel of Johns Hopkins. "Federated learning became our only viable option for international collaboration without violating various countries' data protection laws."

The Regulatory Advantage

Healthcare organizations operate under some of the strictest privacy regulations across all industries - from HIPAA in the United States to GDPR in Europe. Federated learning's architecture provides inherent compliance benefits that traditional data-sharing approaches cannot match. Because patient data never leaves its home institution, the technology significantly reduces legal exposure and simplifies ethical review processes.

This regulatory friendliness has attracted attention beyond academic medical centers. Pharmaceutical companies, constrained by competitive concerns and patient privacy requirements, are exploring federated learning for drug discovery and clinical trial optimization. Health insurers see potential for developing better risk models while maintaining member confidentiality. Even medical device manufacturers are incorporating federated approaches to improve their AI-powered products using real-world data from multiple hospital customers.

Technical Challenges Remain

Despite its promise, federated learning in healthcare isn't without hurdles. The approach requires sophisticated coordination to handle "non-IID" data, meaning data that is not independent and identically distributed across sites: different hospitals serve different patient populations with varying demographics, disease prevalence, and data collection methods. A model trained across wealthy urban academic centers might perform poorly when deployed at rural community hospitals unless these disparities are properly addressed.

Another challenge involves ensuring model explainability - the ability to understand why an AI system makes certain predictions. In highly regulated medical applications, clinicians need to trust and interpret model decisions. Some federated approaches can obscure the lineage of specific model behaviors, making validation more complex than with centrally trained systems.

The Road Ahead

As the technology matures, several developments could accelerate adoption. Standardization efforts led by groups like the Federated Learning Standards Initiative aim to create common frameworks for implementation. New cryptographic techniques such as secure multi-party computation and homomorphic encryption promise to further strengthen privacy guarantees. Meanwhile, cloud providers are rolling out managed federated learning services tailored to healthcare's unique requirements.
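As a rough illustration of how such techniques can hide individual contributions, the sketch below uses pairwise additive masking, a simplified idea from the secure multi-party computation family. Each pair of sites shares a random mask that one adds and the other subtracts, so the masks cancel in the sum and the server learns only the aggregate. This is a toy under stated assumptions: the names `mask_updates` and `aggregate` are hypothetical, updates are assumed to be already quantized to non-negative integers, and a single function simulates all parties, whereas a real protocol would have each pair derive its mask from a privately shared secret and would handle dropouts.

```python
import random

MODULUS = 2**31 - 1  # all arithmetic is done modulo this value


def mask_updates(updates, seed=0):
    """Return masked copies of each site's integer update vector.

    For every pair (i, j), a random mask is added to site i's vector and
    subtracted from site j's, so the masks cancel when all are summed.
    """
    rng = random.Random(seed)  # stands in for pairwise shared secrets
    n, dim = len(updates), len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            for k in range(dim):
                m = rng.randrange(MODULUS)
                masked[i][k] = (masked[i][k] + m) % MODULUS
                masked[j][k] = (masked[j][k] - m) % MODULUS
    return masked


def aggregate(masked):
    """Server side: sum the masked vectors; pairwise masks cancel out."""
    dim = len(masked[0])
    return [sum(u[k] for u in masked) % MODULUS for k in range(dim)]


# Three hypothetical sites, each holding a quantized two-value update.
updates = [[3, 10], [5, 20], [7, 30]]
masked = mask_updates(updates)
total = aggregate(masked)
print(total)  # element-wise sum of the original updates
```

The server in this sketch receives only the `masked` vectors, which look like random numbers on their own, yet their sum matches the sum of the true updates as long as that sum stays below `MODULUS`.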

Perhaps most importantly, changing attitudes among historically competitive healthcare institutions suggest growing recognition that collaboration needn't come at the expense of patient privacy or institutional advantage. "We're seeing a cultural shift," observes MIT's Professor Susan Chen, who studies organizational behavior in healthcare. "Leaders now understand that federated learning lets them maintain control while contributing to something greater than any single institution could achieve alone."

The ultimate test will come as these systems scale beyond pilot projects. If federated learning can deliver on its promise of breaking down data silos while preserving privacy, it may finally unlock the collaborative potential needed to bring AI's full benefits to patients worldwide. For an industry balancing revolutionary technology with sacred ethical obligations, this privacy-preserving approach might just offer the best of both worlds.

By /Jul 18, 2025
